Dataset

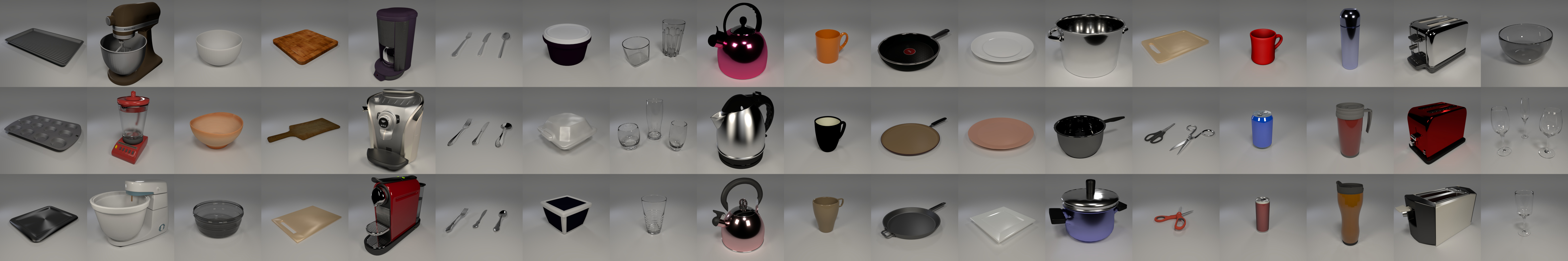

SHOP-VRB (Simple Household Object Properties) is a benchmark for visual reasoning and for recovering a structured, semantic representation of a scene. The dataset builds on the CLEVR benchmark, which contains synthetically generated images of simple geometric objects against a plain background, together with questions about those objects and their arrangement. SHOP-VRB provides scenes with various kitchen objects and appliances, including articulated ones, along with questions about those scenes. Each object class comes with a set of short natural language descriptions, which extend the dataset with visual-textual questions. In addition to the classical training, validation and test splits, we propose a further benchmark split containing unseen objects of known classes as a measure of generalisability.

Statistics

- 66 objects belonging to 20 classes

- 20 objects exclusive to the benchmark split

- 11 short text descriptions for each class

- Overlap between splits below 1.5%

| | training | validation | test | benchmark |
|---|---|---|---|---|
| Images | 10000 | 1500 | 1500 | 1500 |
| Questions (visual) | 199952 | 30000 | 30000 | 30000 |
| Questions (textual) | 100000 | 15000 | 15000 | 15000 |
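As a quick sanity check on the figures in the table, the following minimal sketch computes the number of questions per image in each split. The counts are copied from the table; the dictionary layout and function names are illustrative assumptions and are not part of the dataset tooling.

```python
# Split sizes copied from the statistics table.
# The structure and names below are hypothetical, chosen for illustration only.
SPLITS = {
    "training":   {"images": 10000, "visual": 199952, "textual": 100000},
    "validation": {"images": 1500,  "visual": 30000,  "textual": 15000},
    "test":       {"images": 1500,  "visual": 30000,  "textual": 15000},
    "benchmark":  {"images": 1500,  "visual": 30000,  "textual": 15000},
}


def questions_per_image(split):
    """Return (visual, textual) questions per image for the given split."""
    counts = SPLITS[split]
    return (
        counts["visual"] / counts["images"],
        counts["textual"] / counts["images"],
    )


def totals():
    """Sum images and questions over all four splits."""
    return {
        key: sum(counts[key] for counts in SPLITS.values())
        for key in ("images", "visual", "textual")
    }


if __name__ == "__main__":
    for name in SPLITS:
        visual, textual = questions_per_image(name)
        print(f"{name:<10} visual/image={visual:.2f} textual/image={textual:.2f}")
    print(totals())
```

Under these figures, each image is paired with roughly 20 visual questions and 10 textual questions, with the training split falling slightly short of 20 visual questions per image.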

Publications

SHOP-VRB: A Visual Reasoning Benchmark for Object Perception

Michal Nazarczuk, Krystian Mikolajczyk

Published at ICRA 2020

@article{nazarczuk2020shop,
  title={SHOP-VRB: A Visual Reasoning Benchmark for Object Perception},
  author={Nazarczuk, Michal and Mikolajczyk, Krystian},
  journal={International Conference on Robotics and Automation (ICRA)},
  year={2020}
}

Codebase

- Dataset generation code

  - Use it to generate new scenes

  - Use it to generate new questions along with ground truth answers

Credits

The dataset was prepared using Blender models released under Creative Commons licences. The models were modified for the purpose of creating images of a kitchen environment. The following list contains the models released under the Attribution 2.0 Generic (CC BY 2.0) licence, along with their original authors.

- Baking tray by elbrujodelatribu

- Blender by Jedrush

- Coffee makers by 3DHaupt and unangelo

- Cutlery sets by miguelromeroh and Wig42

- Glasses by Michal David and b2przemo

- Kettles by PrinterKiller and Wig42

- Mug by NazzarenoGiannelli

- Pan by fransteddy

- Pots by the4thworld and anjargaluh

- Scissors by cbas

- Toasters by PrinterKiller and specsdude

- Wine glasses by YellowPanda

All other models are freely available in the public domain.